Recently I had a chance to play a little bit with Corda, the open source, permissioned, JVM-based blockchain platform. I was surprised to discover how it blends blockchain ideas with commodity middleware technology and creates a new breed of decentralized enterprise integration. Below are my first impressions of it, along with an Apache Camel connector contribution.

What is Corda?

Corda is a decentralized database and business process platform designed and built from the ground up for the implementation of legal agreements among identifiable parties. It is a DLT implementation heavily influenced by Bitcoin's UTXO model and driven by the "enterprisy" requirements of the financial industry. Corda is written in Kotlin, runs on the JVM and uses many proven middleware technologies. As such, compared to other blockchain platforms, Corda offers a low barrier to entry for Java developers experienced with integration, messaging and business process management.

Design Principles

- Permissioned (instead of a permissionless network such as Bitcoin, Ethereum, etc.) - this is no surprise, as enterprise blockchain use cases are primarily focused on automating business integration among identifiable parties.

- Point-to-point (instead of global transaction broadcasts as in Bitcoin and others) - data is shared only among the nodes that need to know it, which also improves privacy and scalability.

- UTXO model similar to Bitcoin (instead of the account model of Ethereum) - this is the part that makes Corda a DLT/blockchain rather than a distributed business process management platform.

- Re-use (instead of building everything from scratch) - this is my favorite part of Corda. Reuse of the Java ecosystem, reuse of relational databases, reuse of messaging systems, etc.
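To make the UTXO versus account distinction above more concrete, here is a minimal plain-Java sketch. It is not Corda code: `LedgerModels`, `AccountLedger`, `UtxoLedger` and `State` are hypothetical names invented for illustration, and contract validation is deliberately left out. The point is only that an account ledger edits balances in place, while a UTXO ledger consumes immutable states and produces new ones.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.HashMap;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Illustrative only: contrasts account-style and UTXO-style bookkeeping. Not Corda API. */
final class LedgerModels {

    /** Account model: one mutable balance per owner, updated in place. */
    static final class AccountLedger {
        private final Map<String, Long> balances = new HashMap<>();

        void deposit(String owner, long amount) {
            balances.merge(owner, amount, Long::sum);
        }

        void transfer(String from, String to, long amount) {
            long available = balanceOf(from);
            if (available < amount) {
                throw new IllegalArgumentException("Insufficient funds");
            }
            balances.put(from, available - amount);
            balances.merge(to, amount, Long::sum);
        }

        long balanceOf(String owner) {
            return balances.getOrDefault(owner, 0L);
        }
    }

    /** UTXO model: an immutable fact that is either unspent or consumed, never edited. */
    static final class State {
        final String id;
        final String owner;
        final long amount;

        State(String id, String owner, long amount) {
            this.id = id;
            this.owner = owner;
            this.amount = amount;
        }
    }

    static final class UtxoLedger {
        private final Map<String, State> unspent = new LinkedHashMap<>();
        private final List<State> consumed = new ArrayList<>();

        void issue(State state) {
            unspent.put(state.id, state);
        }

        /** Consumes the input states and records the outputs as new unspent states. */
        void spend(List<State> inputs, List<State> outputs) {
            // Reject the whole update if any input is unknown or already consumed.
            for (State in : inputs) {
                if (!unspent.containsKey(in.id)) {
                    throw new IllegalStateException("Not unspent: " + in.id);
                }
            }
            for (State in : inputs) {
                State removed = unspent.remove(in.id);
                if (removed != null) {
                    consumed.add(removed); // history is kept, not overwritten
                }
            }
            for (State out : outputs) {
                unspent.put(out.id, out);
            }
        }

        long balanceOf(String owner) {
            long total = 0;
            for (State s : unspent.values()) {
                if (s.owner.equals(owner)) {
                    total += s.amount;
                }
            }
            return total;
        }

        int consumedCount() {
            return consumed.size();
        }
    }

    public static void main(String[] args) {
        AccountLedger accounts = new AccountLedger();
        accounts.deposit("Alice", 100);
        accounts.transfer("Alice", "Bob", 30);

        UtxoLedger utxo = new UtxoLedger();
        State coin = new State("s1", "Alice", 100);
        utxo.issue(coin);
        // Alice's single state is consumed; Bob's payment and Alice's change are new states.
        utxo.spend(Collections.singletonList(coin),
                Arrays.asList(new State("s2", "Bob", 30), new State("s3", "Alice", 70)));

        System.out.println("Account model: Alice=" + accounts.balanceOf("Alice")
                + ", Bob=" + accounts.balanceOf("Bob"));
        System.out.println("UTXO model: Alice=" + utxo.balanceOf("Alice")
                + ", Bob=" + utxo.balanceOf("Bob")
                + ", consumed states=" + utxo.consumedCount());
    }
}
```

Both ledgers end up with the same balances, but only the UTXO one keeps the consumed state around as history and can reject a second attempt to spend the same state by its identity.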

Main Concepts

- A permissioned network made up of point-to-point communicating nodes.

- A ledger where each node maintains its own database, rather than a single shared store.

- Notary nodes that prevent double spends and validate transactions.

- Oracle services that only sign transactions if the included facts are true (slightly different to typical oracles).

- State objects are immutable objects that represent on-ledger facts. The state is modified through transactions and stored on owning nodes only.

- Contracts are deterministic JVM based functions that validate the transactions.

- Transactions are candidate updates to the ledger and must be contractually valid and signed to be committed.

- Flows encapsulate business processes and abstract away all the networking, I/O, storage and concurrency. All smart contract activity occurs within the scope of flows, which can be started through RPC calls or by other flows. Unlike contracts, flows do not run within a sandbox but are executed as regular Java code.

A Corda Flow that interacts with Node A, Node B, and the Notary Pool

In Ethereum, the concept of a smart contract encapsulates both the business logic and the state in one. In Corda, state and contract objects are separate concepts: the state is persisted, and contracts are deterministic functions (meaning that all transaction validations performed by the contracts on different nodes should produce the same result).
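The separation of state and contract can be sketched in a few lines of plain Java. This is an illustration under my own assumptions rather than Corda's API: `ContractSketch`, `CashState`, `verify` and `isValid` are hypothetical names, and the rules being checked (owners must sign, amounts must balance) are made up for the example. What matters is that the state is plain data, and the contract is a function whose verdict depends only on its arguments.

```java
import java.util.Arrays;
import java.util.Collections;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

/** Illustrative only: state as plain data, contract as a pure verification function. */
final class ContractSketch {

    /** State: immutable data that a node would persist. It carries no logic. */
    static final class CashState {
        final String owner;
        final long amount;

        CashState(String owner, long amount) {
            this.owner = owner;
            this.amount = amount;
        }
    }

    /**
     * Contract: accepts or rejects a proposed update using only its arguments.
     * It reads no clock, random source, file or network, so every node that
     * runs it on the same transaction reaches the same verdict.
     */
    static void verify(List<CashState> inputs, List<CashState> outputs, Set<String> signers) {
        long in = 0;
        for (CashState s : inputs) {
            if (!signers.contains(s.owner)) {
                throw new IllegalArgumentException("Missing signature of input owner: " + s.owner);
            }
            in += s.amount;
        }
        long out = 0;
        for (CashState s : outputs) {
            if (s.amount <= 0) {
                throw new IllegalArgumentException("Output amounts must be positive");
            }
            out += s.amount;
        }
        if (in != out) {
            throw new IllegalArgumentException("Inputs and outputs must balance");
        }
    }

    /** Convenience wrapper that turns the verdict into a boolean. */
    static boolean isValid(List<CashState> inputs, List<CashState> outputs, Set<String> signers) {
        try {
            verify(inputs, outputs, signers);
            return true;
        } catch (IllegalArgumentException e) {
            return false;
        }
    }

    public static void main(String[] args) {
        List<CashState> inputs = Collections.singletonList(new CashState("Alice", 100));
        List<CashState> outputs = Arrays.asList(new CashState("Bob", 30), new CashState("Alice", 70));
        Set<String> signers = new HashSet<>(Collections.singletonList("Alice"));

        System.out.println("Signed and balanced: " + isValid(inputs, outputs, signers));
        System.out.println("Unsigned: " + isValid(inputs, outputs, new HashSet<String>()));
    }
}
```

Because `verify` has no hidden inputs, two nodes can validate the same proposed transaction independently and never disagree, which is the property the paragraph above describes.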

In addition, Corda introduces the concept of a Flow (a kind of distributed orchestration engine), which in the Ethereum world would be similar to contracts calling each other (a kind of choreography). But Corda flows are not deployed to all nodes and are not part of the shared state; rather, they are standard JVM code, specific to individual nodes.
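As a rough mental model of a flow orchestrating two nodes and a notary, consider the plain-Java sketch below. It does not use the Corda flow API; `FlowSketch`, `Notary`, `Node` and `transferFlow` are hypothetical names, and signing and messaging are omitted entirely. It only shows the shape of the orchestration: the notary is asked whether the inputs are still unconsumed, and the result is recorded only by the nodes that took part.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

/** Illustrative only: a flow as ordinary code coordinating two nodes and a notary. */
final class FlowSketch {

    /** Remembers which input states were consumed, to prevent double spends. */
    static final class Notary {
        private final Set<String> consumedRefs = new HashSet<>();

        /** Marks the inputs as consumed only if none of them was consumed before. */
        synchronized boolean notarise(List<String> inputRefs) {
            for (String ref : inputRefs) {
                if (consumedRefs.contains(ref)) {
                    return false;
                }
            }
            consumedRefs.addAll(inputRefs);
            return true;
        }
    }

    /** Each node keeps its own record of the transactions it took part in. */
    static final class Node {
        final String name;
        private final List<String> vault = new ArrayList<>();

        Node(String name) {
            this.name = name;
        }

        void record(String txId) {
            vault.add(txId);
        }

        List<String> recordedTransactions() {
            return Collections.unmodifiableList(vault);
        }
    }

    /**
     * Hypothetical flow: plain code that runs on the initiating node and
     * drives the counterparty and the notary through a fixed sequence of steps.
     */
    static boolean transferFlow(Node initiator, Node counterparty, Notary notary,
                                String txId, List<String> inputRefs) {
        // Step 1: the notary confirms that the inputs have not been spent yet.
        if (!notary.notarise(inputRefs)) {
            return false;
        }
        // Step 2: only the participants record the transaction; no global broadcast.
        initiator.record(txId);
        counterparty.record(txId);
        return true;
    }

    public static void main(String[] args) {
        Notary notary = new Notary();
        Node nodeA = new Node("NodeA");
        Node nodeB = new Node("NodeB");
        Node nodeC = new Node("NodeC"); // not a party to the transaction

        boolean first = transferFlow(nodeA, nodeB, notary, "tx1", Collections.singletonList("s1"));
        boolean replay = transferFlow(nodeA, nodeC, notary, "tx2", Collections.singletonList("s1"));

        System.out.println("First transfer accepted: " + first);
        System.out.println("Re-spend of the same input accepted: " + replay);
        System.out.println("NodeC recorded: " + nodeC.recordedTransactions());
    }
}
```

The orchestration style is visible in `transferFlow`: one piece of node-local code decides the order of steps, instead of each participant reacting independently to the others.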

Technology Stack

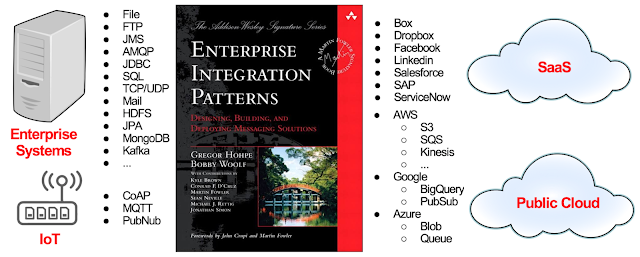

Driven by the "re-use" principle, Corda reuses existing storage, messaging and Java solutions. While blockchain platforms such as Quorum take a permissionless PoW framework such as Ethereum and make it "enterprisy" by replacing the consensus mechanism, removing gas payments, introducing private transactions, etc., Corda takes the opposite approach. Corda takes existing middleware technologies, applies the Bitcoin concept of UTXO, and creates a new class of software that can be described as "a distributed business process and state management system". Corda achieves that through the use of commodity technologies such as relational databases for storage, and messaging for state replication and distributed business process coordination.

Main components of Corda

- It builds with Gradle, requires Oracle JDK 8, and runs on Docker (and on Linux in production).

- A Corda node is a flat classpath JVM application (no Spring Boot, app server, or OSGi container required).

- Storage: relational database - H2, PostgreSQL, SQL Server, OracleDB.

- Object-Relational Mapping: JPA - JBoss Hibernate.

- Messaging: AMQP based - Apache ActiveMQ Artemis.

- Metrics: Jolokia.

- Other: Quasar, Kryo, Shiro, Jackson, etc.

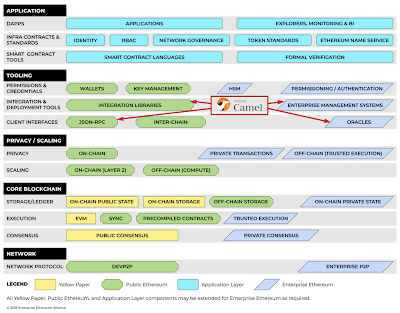

Apache Camel Integration with Corda

Driven by my background in enterprise integration and my interest in blockchain, I recently created and wrote about an Apache Camel connector for Ethereum and Quorum. In the same spirit of exploring enterprise blockchains, I created an Apache Camel connector for Corda. The connector uses the Corda RPC library and provides Camel producer/consumer endpoints to interact with a Corda node. The component offers a consumer for subscribing to and receiving events from a Corda node, and a producer for sending commands to a node.

Apache Camel connector for Corda

Consumer: vaultTrack, vaultTrackBy, vaultTrackByCriteria, vaultTrackByWithPagingSpec, vaultTrackByWithSorting, stateMachinesFeed, networkMapFeed, stateMachineRecordedTransactionMappingFeed, startTrackedFlowDynamic.

Producer: currentNodeTime, getProtocolVersion, networkMapSnapshot, stateMachinesSnapshot, stateMachineRecordedTransactionMappingSnapshot, registeredFlows, clearNetworkMapCache, isFlowsDrainingModeEnabled, setFlowsDrainingModeEnabled, notaryIdentities, nodeInfo, addVaultTransactionNote, getVaultTransactionNotes, uploadAttachment, attachmentExists, openAttachment, queryAttachments, nodeInfoFromParty, notaryPartyFromX500Name, partiesFromName, partyFromKey, wellKnownPartyFromX500Name, wellKnownPartyFromAnonymous, startFlowDynamic, vaultQuery, vaultQueryBy, vaultQueryByCriteria, vaultQueryByWithPagingSpec, vaultQueryByWithSorting.

To find out more about Camel, and how it can complement Corda solutions, read the Camel Ethereum connector article linked above.

Conclusion

Public permissionless blockchains are facing serious technical challenges in the form of scaling, governance, and energy waste, as well as non-technical challenges around speculation, regulation, general usefulness and applicability. They have the noble idea of decentralizing everything but are yet to prove that the technology and the economic models are capable of delivering that vision.

On the other hand, private permissioned blockchains such as Corda, Fabric, and Quorum are immune to these technical challenges, as they target use cases with a smaller number of identifiable parties in regulated markets. Their goal is to improve and automate the existing business models of the enterprise rather than to discover brand new economic models. In a sense, permissioned blockchains represent the next generation of cross-organization business process and data integration systems.

In this space, Corda is not a revolutionary but rather an evolutionary platform, built on top of well-established storage and middleware technology ecosystems. Blockchain technology still has to prove itself, and building on top of proven technology is the first step.

My next stop on exploring enterprise blockchains will be Hyperledger Fabric. Take care.